Did you know that there exists on campus at least one mad scientist’s lair? That is to say, what appears to be a mad scientist’s lair (don’t want to blow anyone’s cover). There are probably many more besides, but Dr Jacob Rosen’s Bionics Lab looks like a set for an upcoming Ridley Scott film called The Scientist’s Lair or something. And I mean that in the best way possible.

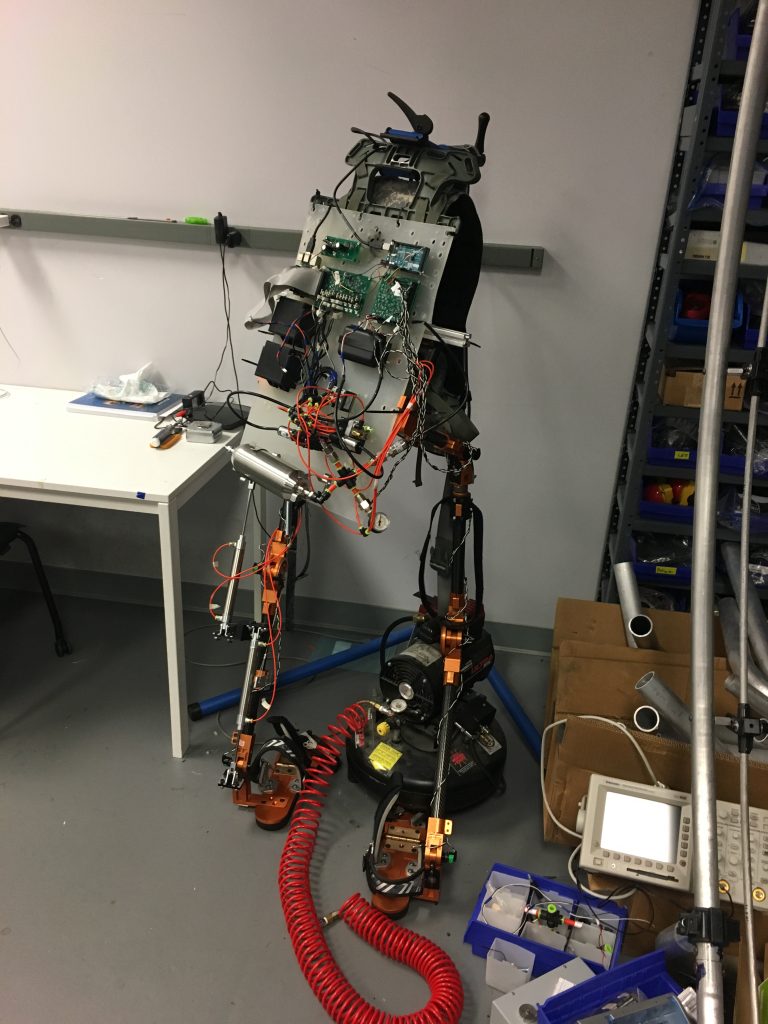

Strewn about the lab is a smorgasbord of stuff. Bits, pieces, bits of pieces, pieces of bits, and, of course, full and partial exoskeletons lie scattered around in a manner befitting the minds that combine medicine, physiology, neuroscience, mechanical engineering, electrical engineering, bioengineering, and whatever else I’m missing (which, admittedly, is a lot). (Incidentally, those seem to be the twin recurring themes of these lab visits: brilliant minds with broad specializations, and me missing stuff.)

When you first enter the space, it’s not graduate students wearing exoskeletons that jump out at you, but rather a big, giant plaster-like vertical dome thingy. That was the technical term they used, I believe. Further back in the lab you can see metal scaffolding in much the same shape; the two serve a similar purpose, but you could say that one is more…stucco in place than the other. More on that later.

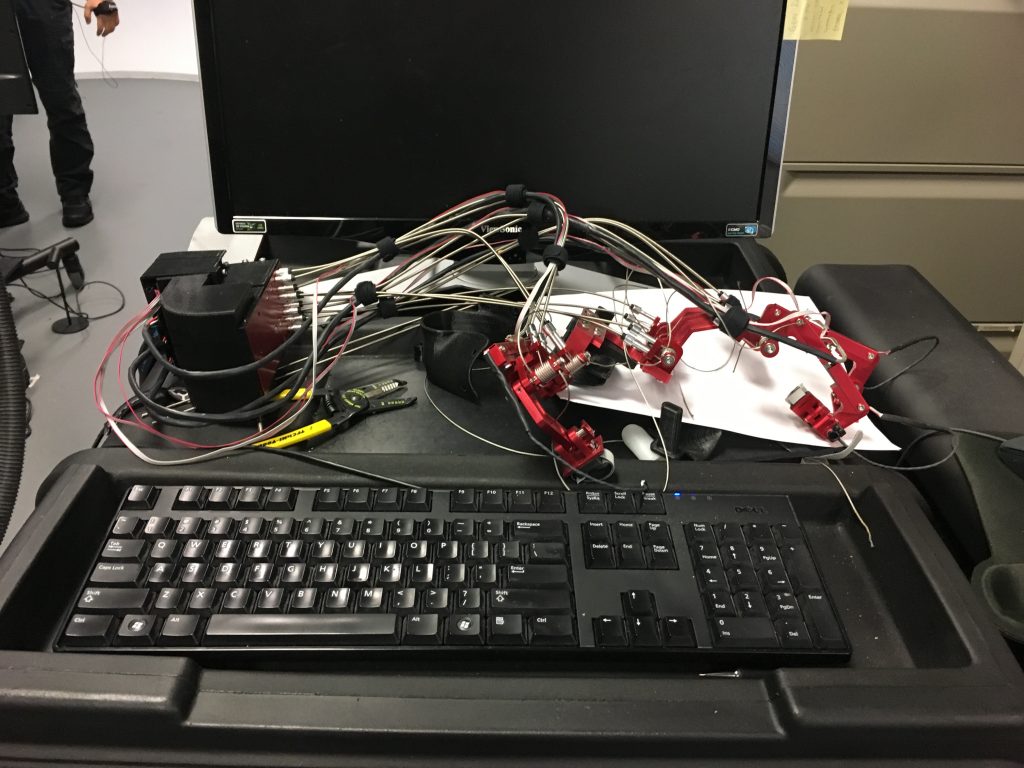

I was greeted next to the dome by Dr Ji Ma, who was busy making minor adjustments to the four projectors that work together to produce an image of up to 4K resolution. However, Dr Ma was more keen to show me his nifty invention nearby: a set of wearable inertial measurement unit (IMU) sensors. As with the other equipment I was introduced to, these devices were designed to help in the physical rehabilitation of stroke patients.

The wearables are wireless prototypes, and each one contains accelerometers and gyroscopes to record the speed and range of the subject’s movement.
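For the curious, here is a minimal Python sketch of one common way to turn raw accelerometer and gyroscope readings into speed and range-of-motion numbers like those described above. It assumes a single joint axis, gyroscope rates in degrees per second, and a complementary filter to blend the two sensors; the function names and the 0.98 blending weight are illustrative assumptions, not details of Dr Ma’s actual sensors.

```python
import math

def fuse_tilt(gyro_dps, accel_xyz, dt, alpha=0.98):
    """Complementary filter (an assumed technique, not Dr Ma's design).

    gyro_dps:  angular velocity about one axis, degrees/second, one per sample
    accel_xyz: (ax, ay, az) accelerometer readings in g, one per sample
    dt:        seconds between samples
    Returns a list of estimated tilt angles in degrees, one per sample.
    """
    # Start from the accelerometer's estimate of tilt relative to gravity.
    ax, ay, az = accel_xyz[0]
    angle = math.degrees(math.atan2(ay, az))
    trace = [angle]
    for w, (ax, ay, az) in zip(gyro_dps[1:], accel_xyz[1:]):
        accel_angle = math.degrees(math.atan2(ay, az))
        # Integrated gyro rate is smooth but drifts; the accelerometer is noisy
        # but drift-free. Blend them, trusting the gyro for short-term changes.
        angle = alpha * (angle + w * dt) + (1 - alpha) * accel_angle
        trace.append(angle)
    return trace

def movement_summary(gyro_dps, trace):
    """Peak angular speed (deg/s) and range of motion (deg) for one movement."""
    peak_speed = max(abs(w) for w in gyro_dps)
    range_of_motion = max(trace) - min(trace)
    return peak_speed, range_of_motion

# Hypothetical readings: a sensor held still, tilted about 45 degrees.
still = fuse_tilt([0.0] * 5, [(0.0, 0.7071, 0.7071)] * 5, dt=0.01)
print(movement_summary([0.0] * 5, still))
```

The gyroscope alone would slowly drift and the accelerometer alone would be jittery, which is one reason wearables like these typically carry both.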

Like the exoskeleton I’m about to talk about, these devices are meant to aid in the rehabilitation of upper-body movement in patients who have suffered brain damage from a stroke. But given how small these sensors will end up being, and how quick and accurate their response was, they could easily find their way into other applications in the XR world.

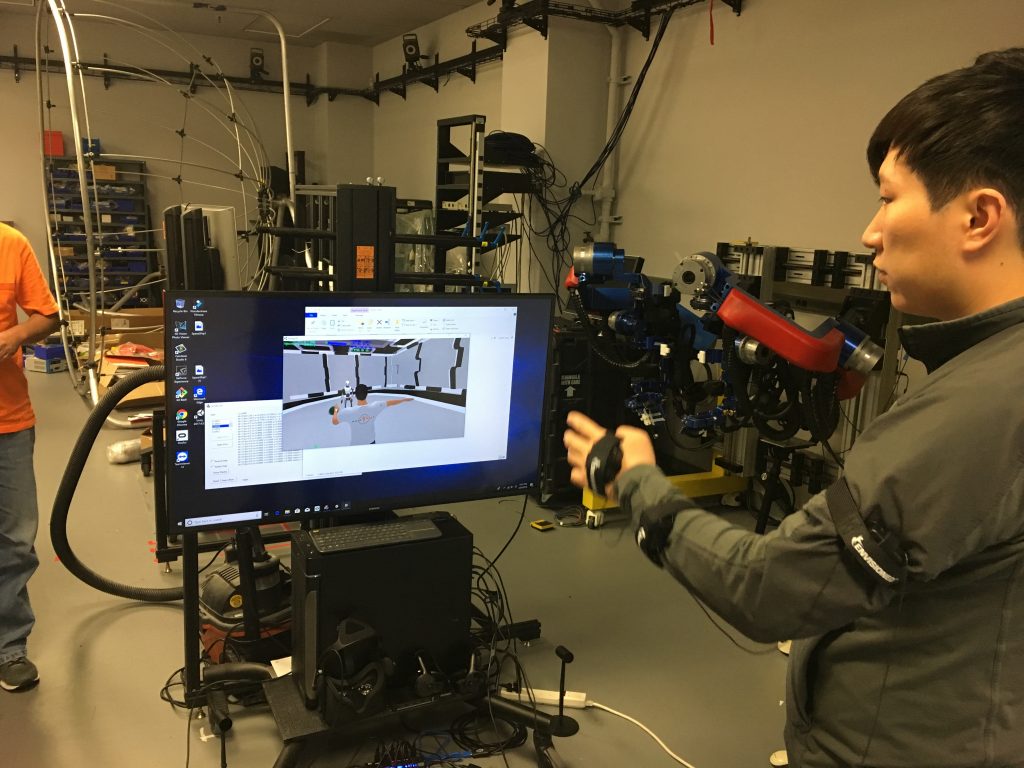

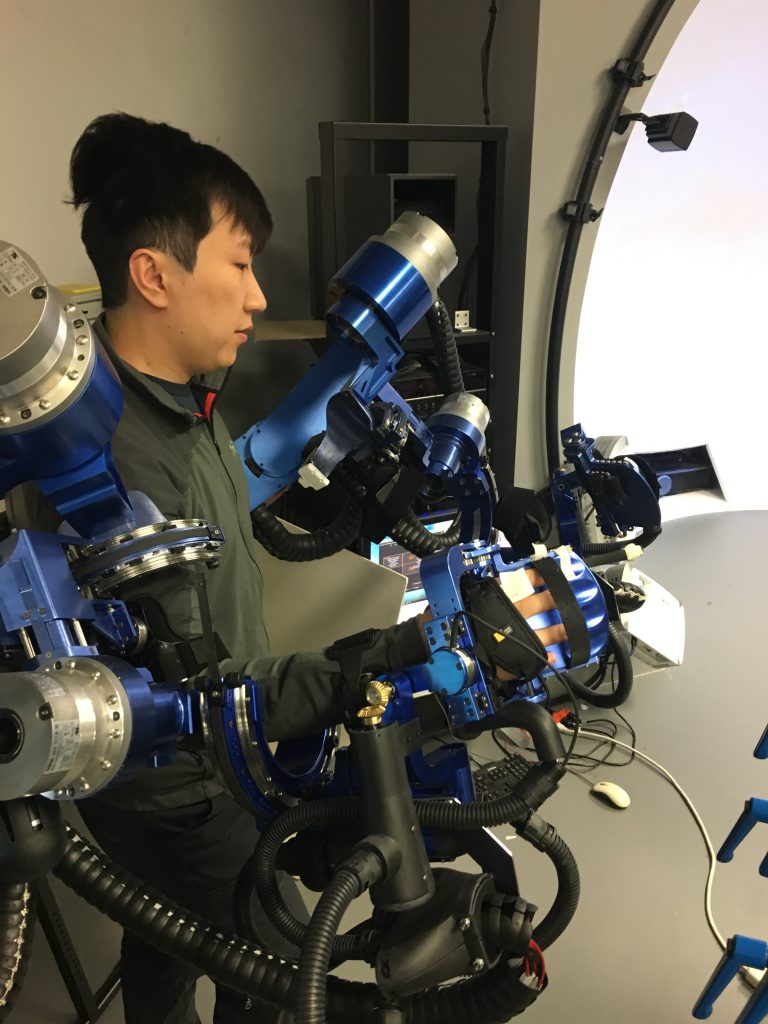

Astute readers will have noticed that in the image of Yang demonstrating the IMU sensors, there sits in the background what appears to be a brilliant blue exoskeleton. And so it is!

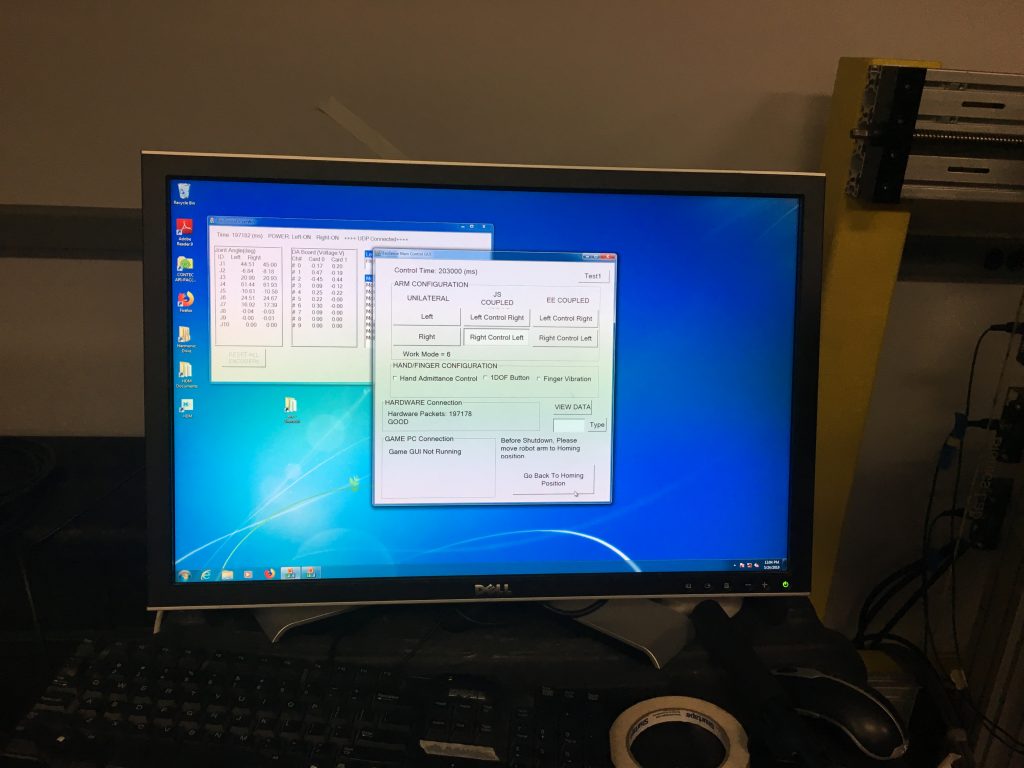

Before I get into what is going to be the grossest of oversimplifications, I want to give special thanks to Yang Shen, a Ph.D. candidate who’s been with the lab for several years now, for being so patient with me and walking me through this incredible device. So, as I said, the device is used for Mirror Image Bilateral Training. Stroke patients often suffer damage to one or both hemispheres of the brain. When only one hemisphere is damaged, movement on one side of the body can become impaired. Based on the theory of neuroplasticity (the brain’s ability to form new neural connections and pathways), this device is meant to strengthen the damaged hemisphere by letting the healthy side provide the proper and/or full range of movement.

Impaired movement as a result of a stroke has to do solely with brain damage, not damage to the muscles (e.g., muscular dystrophy) or nerves (e.g., spinal cord injury). Because of this, it is believed that thinking the command to move your arm, coupled with the arm actually moving the way it’s meant to, helps rehabilitate the damaged hemisphere.

The device also allows for pre-programmed movements (set by a physical therapist, for example), is height- and width-adjustable, and uses three harmonic drives and four Maxon DC motors for a full range of seven degrees of freedom, but only comes in one color. Priorities, Dr Rosen, priorities. Where’s my candy red model?

Remember those nine blue handles I mentioned earlier? No? Well, you’re not a very close reader, are you? (Hint: caption, third image)

Pfft, you believe that guy? Anyway, those nine blue handles sit directly across from The Blue Bonecrusher. They’re part of the studies that the lab has been conducting with the exoskeleton, and will also factor into how this all relates to VR, because as awesome as all of this is, it ain’t exactly the point of this, now is it? As you might have already guessed, the idea here is for patients to reach out, grab the handles, and turn. A healthy person will use up to six degrees of freedom to perform this task, even though a human arm comes with seven. This seventh degree is known as redundancy, not unlike my job in a few years’ time.

Stroke patients often do not lose all seven degrees of freedom. As such, although they may be capable of reaching out and grabbing the handle, or otherwise capable of limited movement, the danger lies in reinforcing bad habits. Enter, once again, The Blue Bonecrusher. Instead of letting patients rely unduly on (and thus reinforce), say, five degrees of freedom, the exoskeleton guides and assists them through a healthy range of motion.

Yang’s study goes something like this (and brings us tantalizingly closer to the whole connection to XR). Let’s assume that a healthy range of movement involves an arm moving from point A to point D, passing points B and C along the way. Now let’s assume that a stroke patient has difficulty moving their arm from point A to B and from C to D, but has no difficulty moving from point B to C. Yang programmed the Beast to assist only during those portions of the A-to-D range that the patient struggles with. Yang called it Asymmetric Bilateral Movement, or “Assist as Needed” movement.
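To make the A-to-D idea concrete, here is a toy Python sketch of segment-based “assist as needed” logic. It rests on simplifying assumptions: progress along the path is a single number (0 at point A, 3 at point D), the machine’s help is a simple proportional correction of the tracking error, and the segment layout and gain are hypothetical. The real EXO-UL8 controller works on joint torques and is almost certainly far more involved.

```python
from dataclasses import dataclass

@dataclass
class Segment:
    name: str      # hypothetical label, e.g. "A->B"
    start: float   # path progress where the segment begins (0.0 = point A)
    end: float     # path progress where the segment ends
    assist: bool   # True where the patient struggles and needs help

def assistance(segments, progress, error, gain):
    """Corrective command at the current point on the path.

    Inside a segment flagged for assistance, push toward the healthy
    reference in proportion to the tracking error; elsewhere, stay hands-off.
    """
    for seg in segments:
        if seg.start <= progress < seg.end:
            return gain * error if seg.assist else 0.0
    return 0.0  # outside the A-to-D path: no assistance

# The example from the text: help from A to B and from C to D, not from B to C.
path = [
    Segment("A->B", 0.0, 1.0, assist=True),
    Segment("B->C", 1.0, 2.0, assist=False),
    Segment("C->D", 2.0, 3.0, assist=True),
]
for progress in (0.5, 1.5, 2.5):
    print(progress, assistance(path, progress, error=0.2, gain=10.0))
```

Leaving the B-to-C stretch hands-off is the whole point: the patient does the work they can manage, and the machine fills in only where they can’t.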

Now, that’s impressive enough as it is. But the folks in the Bionics Lair (I’m just renaming everything now) like to be precise, and like so many scientists, they like to measure stuff. A lot.

In order to more accurately program the machine, the Bionics Lair uses these motion capture cameras to record instances of a healthy human reaching for the handles and turning them (or instances of a stroke patient using their healthy hemisphere to make the move). Then, frame by frame (which translates to something like millisecond by millisecond), the researchers can measure each joint’s position relative to the other joints, ultimately plugging all of that information into the Beast.
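As a rough illustration of that frame-by-frame measuring, here is a small Python sketch that computes an elbow angle from three 3D marker positions in each motion capture frame. The marker names (shoulder, elbow, wrist) and the frame format are assumptions for illustration; the lab’s actual pipeline and marker set aren’t described here.

```python
import math

def joint_angle(a, b, c):
    """Angle in degrees at marker b formed by markers a-b-c (e.g. shoulder-elbow-wrist)."""
    ba = [ai - bi for ai, bi in zip(a, b)]
    bc = [ci - bi for ci, bi in zip(c, b)]
    dot = sum(x * y for x, y in zip(ba, bc))
    norm = math.hypot(*ba) * math.hypot(*bc)
    # Clamp to [-1, 1] so floating-point round-off can't push acos out of its domain.
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))

def elbow_angles(frames):
    """One elbow angle per frame; each frame maps a (hypothetical) marker name to (x, y, z)."""
    return [joint_angle(f["shoulder"], f["elbow"], f["wrist"]) for f in frames]

# Two hypothetical frames (metres): arm bent at a right angle, then straightened.
frames = [
    {"shoulder": (0.0, 0.0, 0.0), "elbow": (0.0, -0.3, 0.0), "wrist": (0.3, -0.3, 0.0)},
    {"shoulder": (0.0, 0.0, 0.0), "elbow": (0.0, -0.3, 0.0), "wrist": (0.0, -0.6, 0.0)},
]
print(elbow_angles(frames))
```

Repeating this for every joint in every frame yields the time series of joint angles that a healthy reference movement is built from.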

But that’s not all! As part of Yang’s Assist as Needed study, he incorporated visual stimulation in a virtual reality environment. After all, there’s more to life than just reaching out, grabbing a random handle, and turning it. Yang provided patients with a variety of virtual objects to reach towards and interact with, tying a virtual ‘string’ between the patient’s palm and the object and pulling on the patient’s arm with the string to assist with the movement as, you guessed it, needed. Yang’s not bad at naming stuff, I’ll admit, but I think I’m better.
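One simple way to model a virtual “string” is as a tension-only spring: it pulls the palm toward the object when stretched and goes slack otherwise (a string can pull but never push). The Python sketch below assumes that model; the function name, stiffness, rest length, and `assist` switch are illustrative assumptions rather than parameters from Yang’s study.

```python
import math

def string_force(palm, target, stiffness, rest_length=0.0, assist=True):
    """Force (x, y, z) a virtual string applies to the palm, pulling toward target.

    Modeled as a tension-only spring: zero when assistance is switched off or the
    string is slack (palm within rest_length of the target), otherwise
    stiffness * stretch, directed from the palm toward the target.
    """
    delta = [t - p for p, t in zip(palm, target)]
    dist = math.hypot(*delta)
    if not assist or dist <= rest_length:
        return (0.0, 0.0, 0.0)
    magnitude = stiffness * (dist - rest_length)
    return tuple(magnitude * d / dist for d in delta)

# Hypothetical numbers: palm 0.5 m from the object, 0.1 m of slack, 100 N/m.
print(string_force((0.0, 0.0, 0.0), (0.3, 0.4, 0.0), stiffness=100.0, rest_length=0.1))
```

In an assist-as-needed setup, `assist` would be switched on only for the stretches of a reach where the patient struggles.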

If you’re anything like me, you’ve sometimes gotten a bit queasy when trying on a VR headset. Now imagine you’re not just a recovering stroke patient, but more than likely a geriatric one who is about as familiar and comfortable around cutting-edge technology as I am with my mother-in-law. So I bet none of you will be very surprised when I say that many of these stroke patients were getting nauseous while using VR headsets.

In an effort to keep himself, his lab, his equipment, and presumably his students vomit-free, Dr Rosen designed this dome to serve as a replacement for VR headsets. The dome is placed directly in front of patients, giving them near-total immersion while still allowing them to see the ground beneath them. Seeing the ground helps the patients stay oriented and keeps their lunches from making a reappearance. The white dome is a fixed prototype; the scaffolding I mentioned and pictured earlier is meant to be more portable.

I call dibs on whatever Dr Rosen’s Bionics Lair cooks up next, because it’s sure to be some kind of exoskeleton. And who doesn’t want an exoskeleton? Internal skeletons are so passé.

By the way, check out Yang’s website for more info about the work he’s doing: https://yangshen.blog/

For further reading that is far above my ability to comprehend, Yang has shared with me some of the published work that I mentioned above:

https://www.sciencedirect.com/science/article/pii/B978012811810800004X

Y. Shen, P. W. Ferguson, J. Ma and J. Rosen, “Chapter 4 – Upper Limb Wearable Exoskeleton Systems for Rehabilitation: State of the Art Review and a Case Study of the EXO-UL8—Dual-Arm Exoskeleton System,” Wearable Technology in Medicine and Health Care (R. K.-Y. Tong, ed.), Academic Press, 2018, pp. 71-90.

https://ieeexplore.ieee.org/abstract/document/8512665

Y. Shen, J. Ma, B. Dobkin and J. Rosen, “Asymmetric Dual Arm Approach For Post Stroke Recovery Of Motor Functions Utilizing The EXO-UL8 Exoskeleton System: A Pilot Study,” 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, 2018, pp. 1701-1707.

doi: 10.1109/EMBC.2018.8512665

https://ieeexplore.ieee.org/abstract/document/8246894

Y. Shen, B. P. Hsiao, J. Ma and J. Rosen, “Upper limb redundancy resolution under gravitational loading conditions: Arm postural stability index based on dynamic manipulability analysis,” 2017 IEEE-RAS 17th International Conference on Humanoid Robotics (Humanoids), Birmingham, 2017, pp. 332-338.

doi: 10.1109/HUMANOIDS.2017.8246894

https://link.springer.com/chapter/10.1007/978-3-030-01887-0_53

P. W. Ferguson, B. Dimapasoc, Y. Shen and J. Rosen, “Design of a Hand Exoskeleton for Use with Upper Limb Exoskeletons,” Wearable Robotics: Challenges and Trends (WeRob 2018) (M. Carrozza, S. Micera and J. Pons, eds.), Biosystems & Biorobotics, vol. 22, Springer, Cham, 2019.

doi: 10.1007/978-3-030-01887-0_53