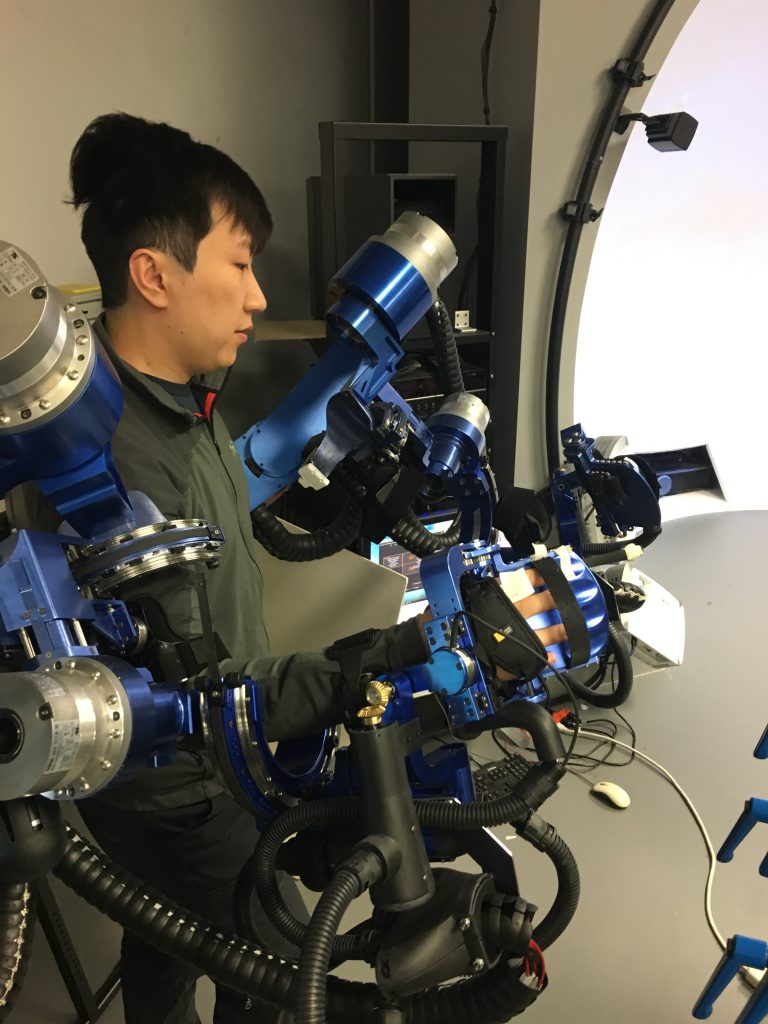

The first part of our guided tour took place in the space pictured above. It was here that we first met our guides for the tour: Dr Randy Steadman, Medical Director and Founder, and Dr Yue Ming Huang, Education Director who has been with the Center for 19 of its 22 years. It was a very humbling experience, and we are very grateful to both of them for taking time out of their busy schedules to show us some of the work that goes on here.

The UCLA Simulation Center is located on the A-level of the aptly named Learning Resource Center, situated right between the School of Medicine and the Ronald Reagan Medical Center. This central location serves the Center well, as medical students and professionals from all disciplines visit throughout the year: medical students, nursing students, residents, nurses, practicing physicians, respiratory therapists, and more all come to the Center for training. Some 10,000 learners visit each year, accounting for nearly 40,000 learning hours annually. Every medical student at UCLA, for instance, completes simulation scenarios at the Center in every year of their studies.

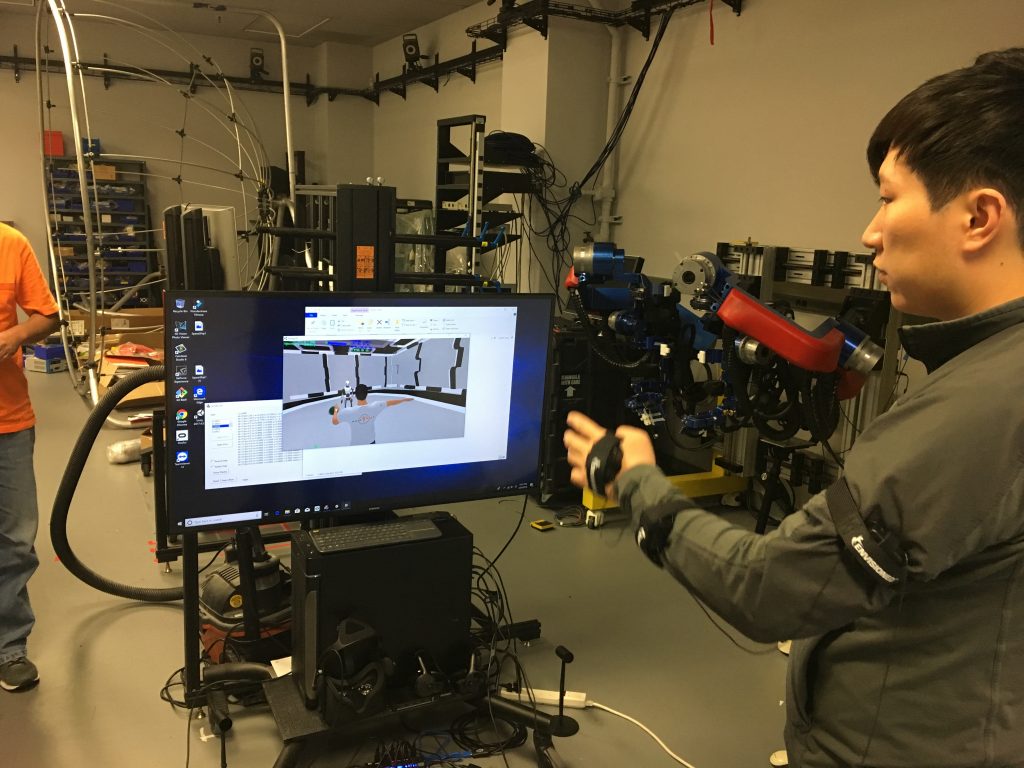

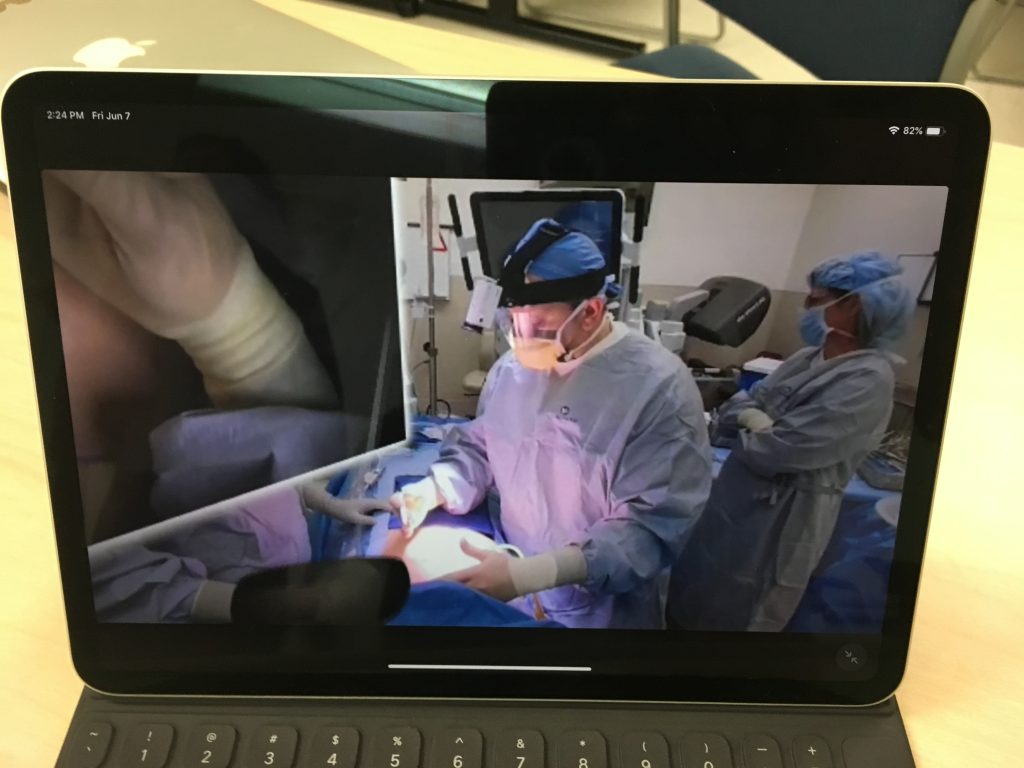

Although it amounts to only a small portion of the training regimen that students can look forward to here, the VR we saw was simultaneously exciting and eerie. It’s exciting to think that future medical professionals will benefit from the amazing potential VR holds for medicine, both for training and for surgical prep and consultation. It’s eerie because I am absolutely certain that this is exactly what an out-of-body experience feels like when it happens outside of Coachella or Burning Man.

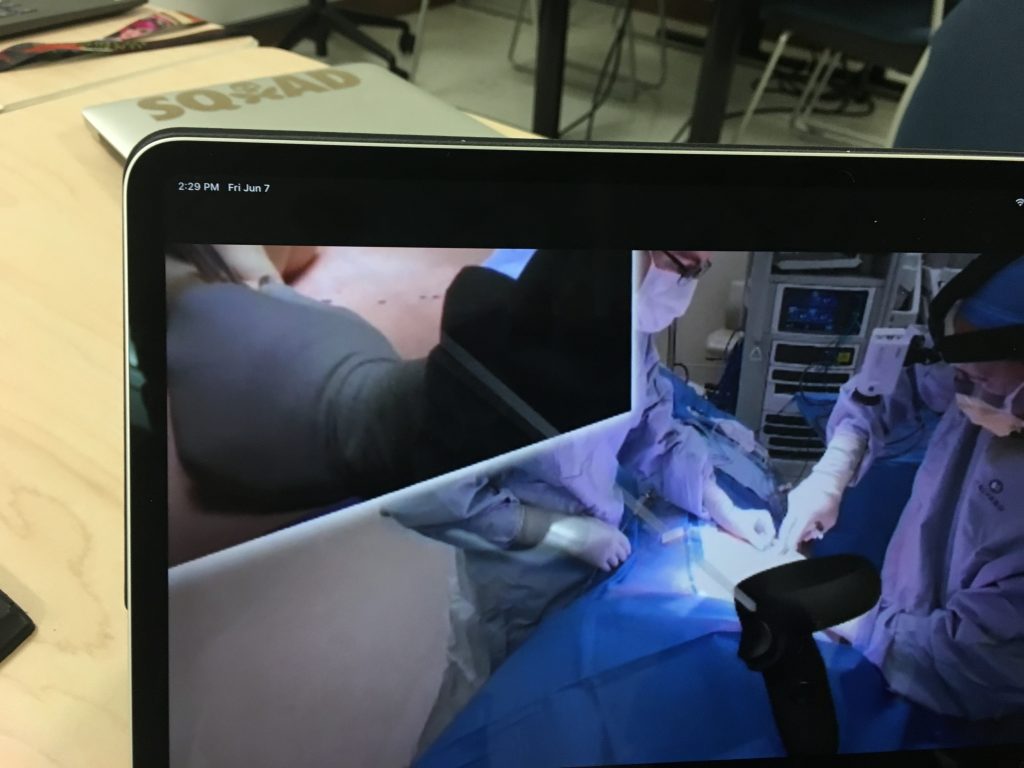

Contrary to what you may be thinking, the image above is not your typical OR. It’s actually what you experience in one of the VR environments we were shown: a 360 video of a live operation, often narrated by the expert surgeon conducting the procedure. As any surgeons reading this may already know, it is rarely a good idea to suspend massive 100″ TVs above a patient during an operation. You might say that would go against standard operating procedure…

These VR demos were hosted by a partner of the UCLA Sim Center, a company called GibLib. (At the time, I didn’t think to ask about the name, but in hindsight part of me hopes it’s a mash-up of a piece of gaming vernacular and a truncated form of “Library.”) GibLib bills itself as the Netflix of medical education, a comparison I wholeheartedly agree with! Both Netflix and GibLib have extensive video libraries, which in GibLib’s case means hundreds of 360 videos covering a wide variety of surgical procedures, often with a brief intro video from the surgeon performing the operation (no Anime, I asked). Both are branching out and beginning to produce their own content, which in GibLib’s case means medical lectures. And finally, both let you subscribe for a trial period, after which you pay a monthly fee.

“Hey honey, what do you want to watch tonight?”

“Oh, I dunno, I really liked that one where Dr Sommer performed a carotid endarterectomy. She really nailed it.”

“Again? Let’s see what else there is. Oh look! It’s a new lecture series by Dr Kim all about Amygdalohippocampectomies.”

Personally I’ve always found Dr Kim’s demeanor too casual given the seriousness of amygdalohippocampectomies, but to each their own. Don’t let that dissuade you, though. After all, I’m the guy that asked GibLib if they had any 360 Anime videos in their library. And that was only after I paid for one year’s subscription.

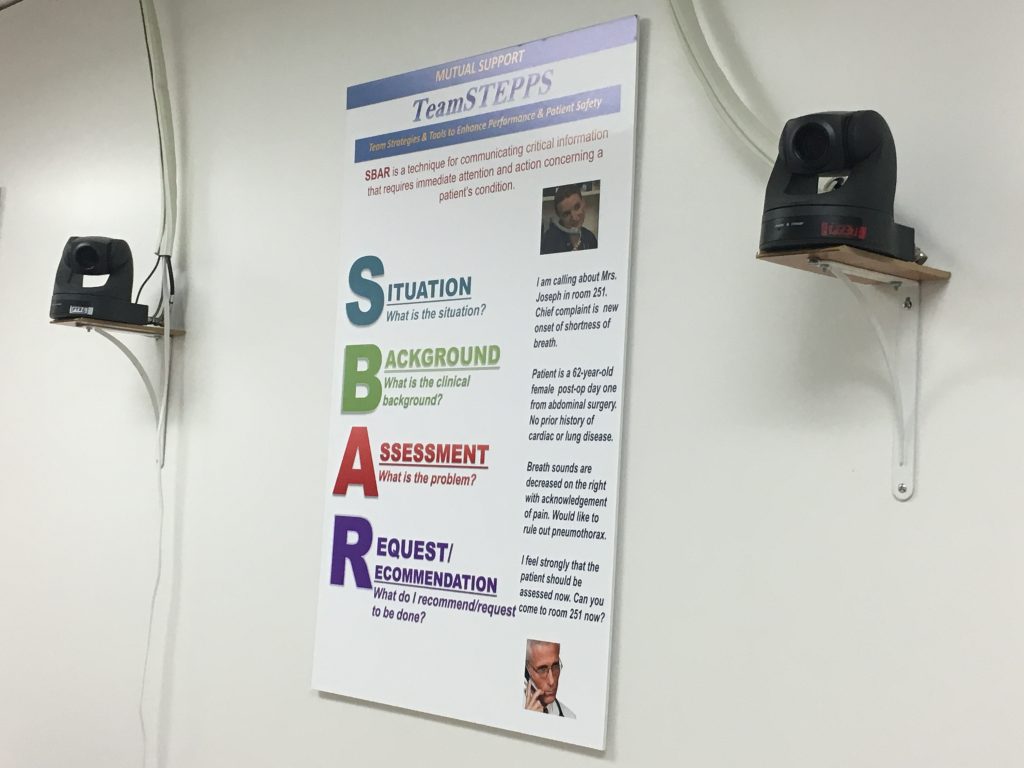

Before moving on to all the awesome simulation rooms, I want to briefly mention why the room was ornamented with about 10 of these cameras. Inspired in part by a previous study conducted for the Department of Defense, the Sim Center was teaming up with GE to develop an algorithm that could rate a doctor’s bedside manner by reading their nonverbal communication (body language). The earlier DoD study followed US soldiers in Afghanistan as they went from village to village trying to build rapport with the inhabitants. Although interactions between doctors and patients usually take place in cooler, less sandy environments, the stakes can be just as high when you or a loved one is receiving devastating news. Studies that grade bedside manner may seem a bit Orwellian (“ten-point deduction, the smile never reached your eyes, Kevin”), but any feedback that helps doctors improve the patient experience is a good thing. Progress on this study also stands to benefit greatly from advances in VR, facial tracking, and eye tracking.

We had spent over half of the tour taking turns with GibLib’s VR demo, in between stimulating conversation with Drs Steadman and Huang. (Because I tend to write these posts in a sarcastic, almost glib tone that belies the fact that my audience consists of my professional colleagues, I should point out that I was not being sarcastic just now: conversations with both of our guides were consistently engaging and illuminating.) Anyway, as I was saying, we had used quite a lot of our allotted hour in one place, and, reluctant though the group was, it was high time we moved on, because, as we were about to learn, the Sim Center is much more than just one room.

Related side note: it is, in fact, also more than just one floor! Thanks to an incredibly generous gift from this author, the Simulation Center is currently in the process of subsuming the two floors immediately above the A-level (Maxine and Eugene Rosenfeld are also helping to fund this transition). Take it from someone who spends any given portion of any given workday in any one of six possible locations: both Dr Steadman and Dr Huang are looking forward to consolidating their scattered locations. Oh, sure, the good Drs claim they enjoy the walks between buildings and the brief moments they get to spend outdoors. But let’s not kid ourselves: if either Dr Steadman or Dr Huang tells someone they’re going for a walk in the Botanical Gardens for no particular reason, who’s to stop them?

Although the colored 3D heart on the left is an artistic representation of the heart inside the mannequin and not anatomically accurate, the black-and-white ultrasound image on the right is a real, live image. Also real is the faint purple cross-section shown with the 3D heart on the left. The cross-section moved as the ultrasound probe moved, giving the student a visual reference for which area of the heart the ultrasound was currently imaging. That reference matters, because as far as I can tell, that black-and-white ultrasound image could just as easily be the first image confirming a pregnancy or the location of a brain tumor. I must have been daydreaming during this part of the tour; otherwise I would say something really smart-sounding about left and right ventricles.

Ventricles? Why’d I start talking about… Right! I was talking about Control Rooms and Simulation Rooms! I’d blame my disorganized writing style on a combination of too much caffeine and not enough sleep, but it’s always this haphazard.

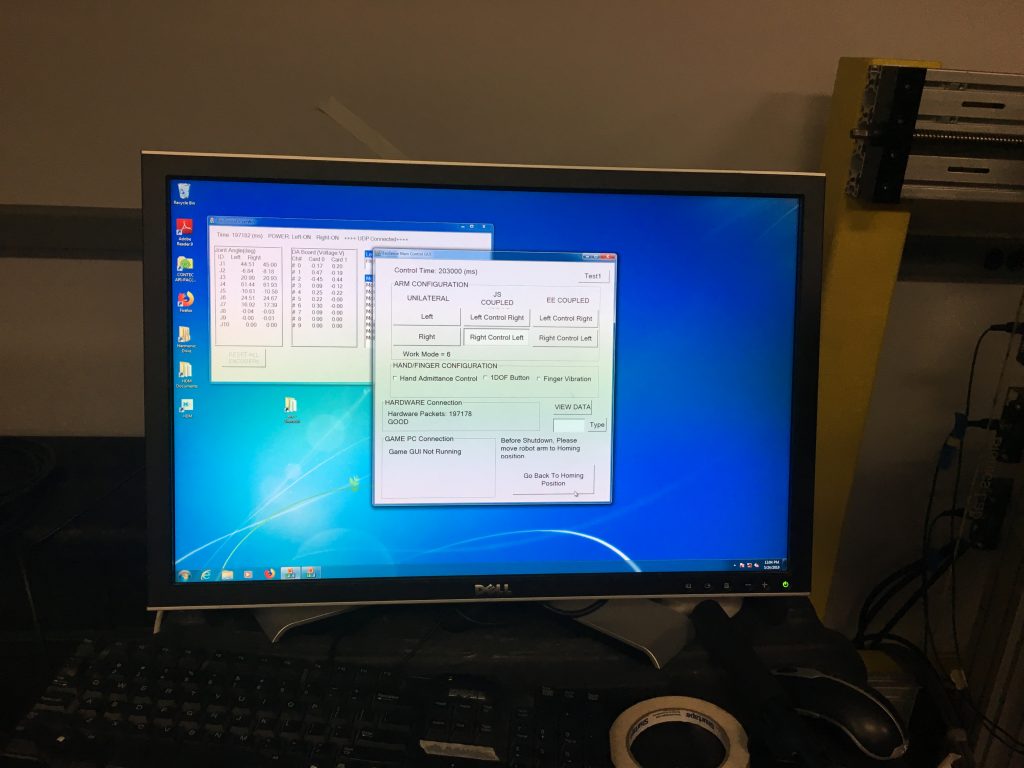

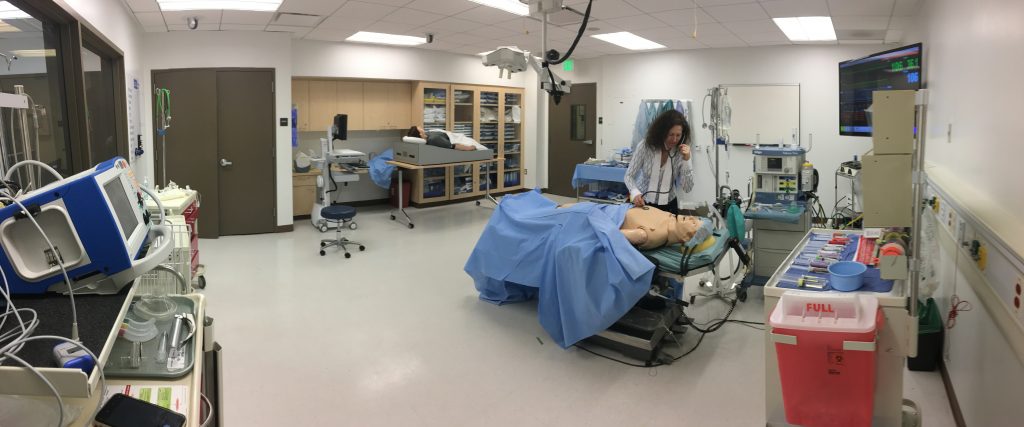

Pictured above is one of the two control rooms we saw, this one being the larger of the two. It allowed three sets of instructors to conduct three separate simulation scenarios simultaneously. Conduct and performance, in this context, are closer in meaning to their musical connotations than one might think. In any given simulation scenario, almost everyone in the room was working from a script, and there was only ever one unscripted person in the room at any given time: the learner. (My assumption is that they use the term “learner” because the scenarios played out in these rooms likely involve actors playing the part of a student, not because the Simulation Center can learn doctors real good.)

Although any given scenario would last no more than 15 minutes, the scripts participants were given were quite detailed, often 10-12 pages long. In addition to the instructions guiding the performances, scripts could contain one or two ‘branch points’ in case the learner performed a specific action. (If the learner decides to perform procedure A, for example, tell them that your foot is tingling.) When I asked more about these branch points, though, Dr Steadman stressed that there would be few, if any, of them. This was an incredibly specific, high-level exercise meant to test a learner’s ability and knowledge on key points under very strict conditions. People like me are probably not the best candidates for these performances, given our tendency to ad-lib (as evidenced by this and other posts).

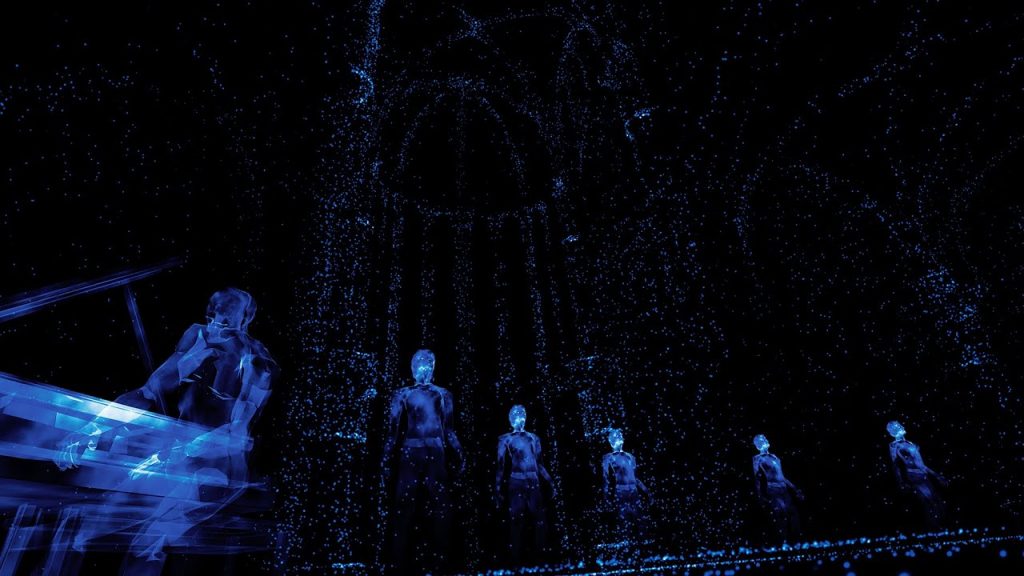

Although these rooms are already equipped with cameras for monitoring and recording performances for later review, they could also support the Center’s push for more VR content for its students. Compared with real operating rooms, the Simulation Rooms are far easier places to install 360 cameras and record simulation scenarios. Footage could then be quickly converted for use in a shared VR environment, giving instructors an unprecedented, on-the-ground ability to coach students on any part of the performance that needs to be addressed.

Additionally, because HIPAA regulations do not account for the privacy concerns of mannequins, permission to make these recordings would presumably be much easier to obtain. The Center could thus start building its own library of VR content while establishing and fine-tuning the best practices and workflows required for recording simulation scenarios. Those best practices and workflows would carry over almost directly to real-life situations, should the Center one day overcome the myriad obstacles it currently faces in building its own 360 video library of surgeries for VR training simulations.

As with all of the places we’ve been fortunate enough to visit this quarter, the work we were shown at the UCLA Simulation Center was cutting-edge, state-of-the-art stuff. Similarly, as with every other place we’ve visited, there’s a feeling of latent potential, a sense of promise hitherto untapped. But rather than feeling discouraged, I’m excited. After all, such a lull in progress is to be expected if you believe in the Gartner Hype Cycle. We’re simply progressing through the Trough of Disillusionment towards the Slope of Enlightenment and onto the Plateau of Productivity. And the coolest thing about all this progress? It’s happening right here, in front of our very own eyes, all over UCLA. You just have to know where to look for it.

If nothing else, the UCLA Simulation Center can always fall back on my idea to simultaneously secure funding and raise awareness of the Center’s work: Halloween Fest 2019 at the UCLA Simulation Center. Imagine walking down all those hallways, surrounded by mannequins…with the lights off.